Minimal Basics of CUDA Programming

When I first started learning about GPUs, the biggest mental shift was understanding how fundamentally different GPUs are from CPUs. a CPU is like a brilliant generalist who can handle complex, sequential tasks but only works on a few things at a time. A GPU, on the other hand, is like an army of thousands of simpler workers, each handling a small piece of the task simultaneously. The CPU excels at step-by-step processes, while the GPU's strength lies in processing vast amounts of data quickly through parallelism.

A modern consumer GPU like the NVIDIA RTX 4090 contains 16,384 CUDA cores (smaller, specialized compute units) compared to a high-end CPU's 16 to 24 general-purpose cores. Each GPU core is slower than a CPU core individually, but together their number allows a GPU to perform massive numbers of calculations simultaneously.

NVIDIA's CUDA (Compute Unified Device Architecture) is a C++ extension that lets you write programs that run directly on the GPU. The core idea is to program your problem in a way that there are many small pieces that can be solved at the same time. Then, let CUDA handle the rest.

Machine learning frameworks like PyTorch or JAX abstract away much of the complexity. You allocate tensors, call operations like tensor.cuda() or tensor.to(device), and the framework handles CUDA execution under the hood. They rely on highly optimized CUDA libraries (like cuBLAS for matrix multiplication and cuDNN for deep learning primitives) to achieve high performance while maintaining a user-friendly Python interface.

For instance, performing vector addition in PyTorch is as simple as:

import torch

# Create two large vectors on the GPU

a = torch.rand(1000000, device='cuda')

b = torch.rand(1000000, device='cuda')

# Add them elementwise

c = a + b

What looks like a single mathematical operation (a + b) is actually launching a GPU kernel; a function running on the GPU that adds corresponding elements of a and b in parallel. PyTorch abstracts away the explicit GPU memory management and thread launching.

This abstraction is powerful, but understanding CUDA at a lower level is useful for optimizing performance in specialized workloads.

Quiz

Consider comparing CPUs to databases and GPUs to MapReduce workers, which operation would theoretically perform WORSE on a GPU compared to a CPU?

CUDA Kernels and Threading Model

In CUDA, a kernel is a function that runs on the GPU. When you launch a kernel, you're not just calling a single function—you're spawning hundreds or thousands of parallel threads that all execute that function simultaneously on different data. This is what NVIDIA calls the Single-Instruction Multiple-Thread model (SIMT): one program, many data pieces.

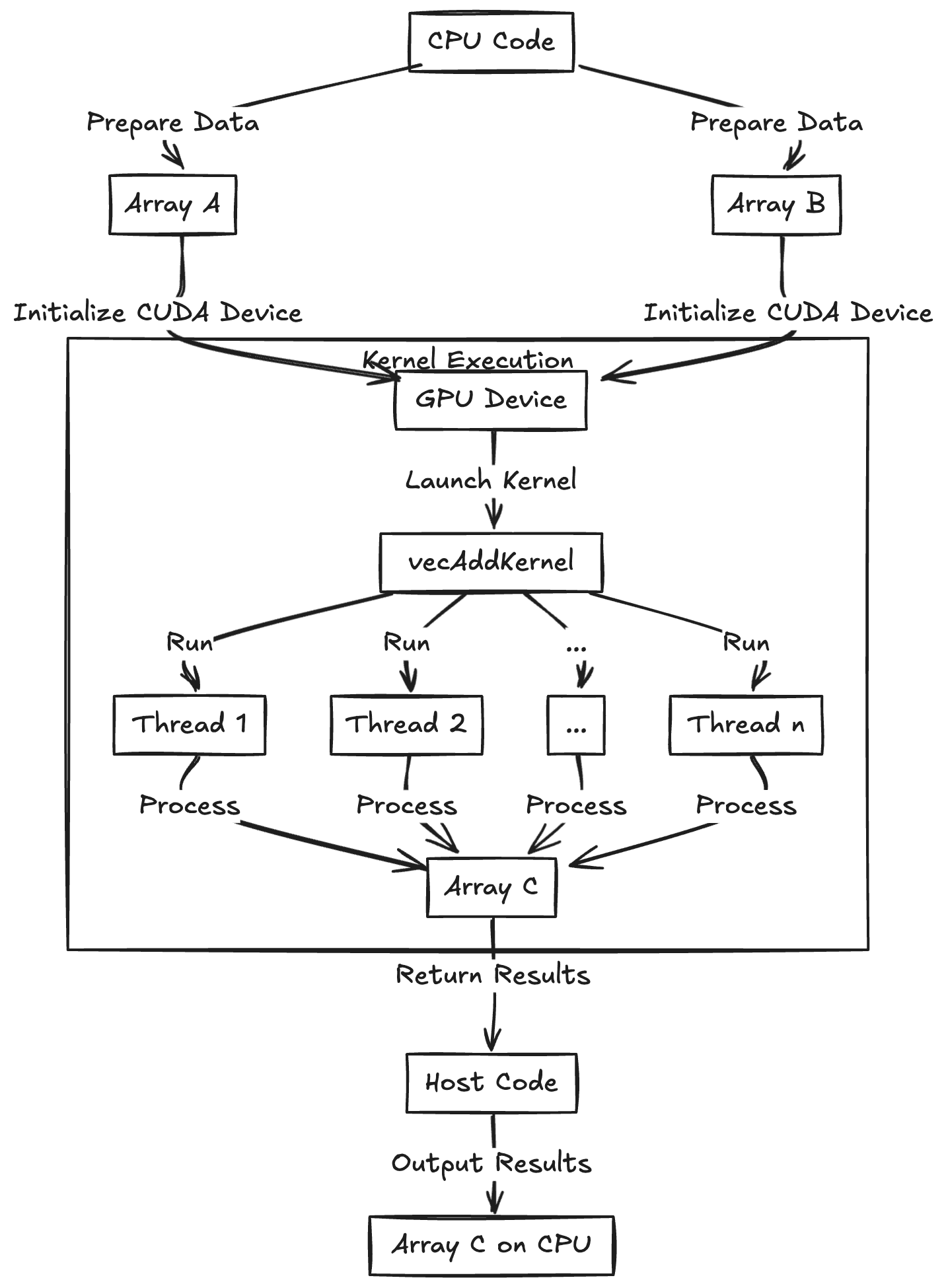

Let me break this down with an example. Say I want to add two arrays of numbers (A and B) to produce an output array (C). In CUDA C++, I'd write a kernel function like this:

__global__ void vecAddKernel(float *A, float *B, float *C, int n) {

int idx = blockIdx.x * blockDim.x + threadIdx.x;

if (idx < n) {

C[idx] = A[idx] + B[idx];

}

}

Essentially, this calculation gives each thread a unique index idx into the arrays. The kernel uses each thread's index to have that thread add one element from A and B. The __global__ qualifier means this function is a CUDA kernel that runs on the device (GPU) and can be launched from host (CPU) code. The if (idx < n) guard is there because you can launch slightly more threads than the array length, and those extra threads simply do nothing if their index is out of range.

When I initiate a kernel's execution from my main CPU program (called the "host code"), I specify the number of threads. The kernel itself runs on the GPU, but I need CPU code to configure and start it. For example:

int N = 1000000;

int threadsPerBlock = 256;

int numberOfBlocks = (N + threadsPerBlock - 1) / threadsPerBlock;

vecAddKernel<<< numberOfBlocks, threadsPerBlock >>>(d_A, d_B, d_C, N); // Launch configuration

This CPU code configures and starts the GPU kernel, specifying that we want numberOfBlocks * threadsPerBlock total threads, arranged as numberOfBlocks blocks of threadsPerBlock threads each.

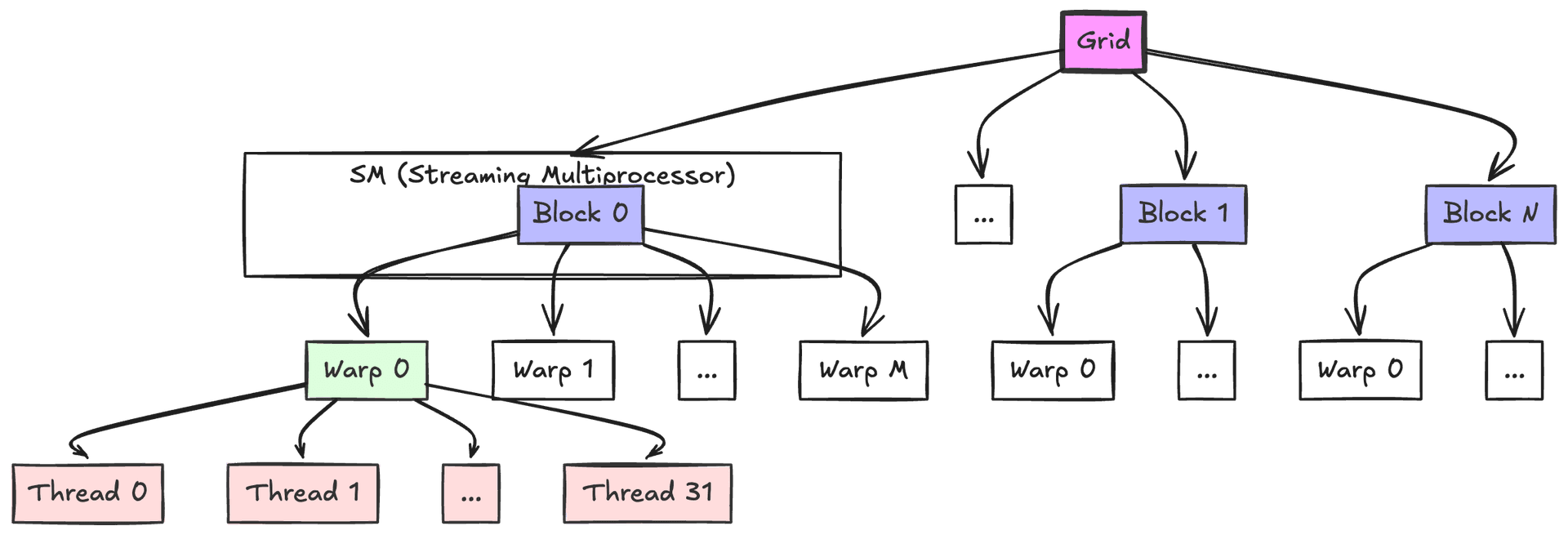

CUDA organizes threads into warps (groups of 32 threads that execute together), which are further grouped into blocks. Each block runs on a Streaming Multiprocessor (SM), which has limited resources like registers and shared memory. The block size affects how these resources are allocated and how many warps can run concurrently (a concept known as occupancy).

When threads in a warp encounter an if-statement, if some threads take one path and others take another, execution becomes serialized. The hardware uses mask bits to track which threads should execute each path, ensuring correctness but potentially impacting performance. An example showing warp divergence:

__global__ void divergentKernel(float *data, int n) {

int idx = blockIdx.x * blockDim.x + threadIdx.x;

if (idx < n) {

// This condition causes warp divergence because threads within

// the same warp may take different paths

if (data[idx] > 0.5f) {

data[idx] *= 2.0f; // Some threads do this

} else {

data[idx] += 1.0f; // While others do this

}

}

}

// Launch configuration considering SM resources

int maxThreadsPerSM = 1024; // Example resource limit

int registersPerThread = 32;

int sharedMemoryPerBlock = 1024; // bytes

// Choose block size to maximize occupancy while respecting limits

int threadsPerBlock = 256; // Multiple of warp size (32)

int numberOfBlocks = (N + threadsPerBlock - 1) / threadsPerBlock;

divergentKernel<<<numberOfBlocks, threadsPerBlock>>>(d_data, N);

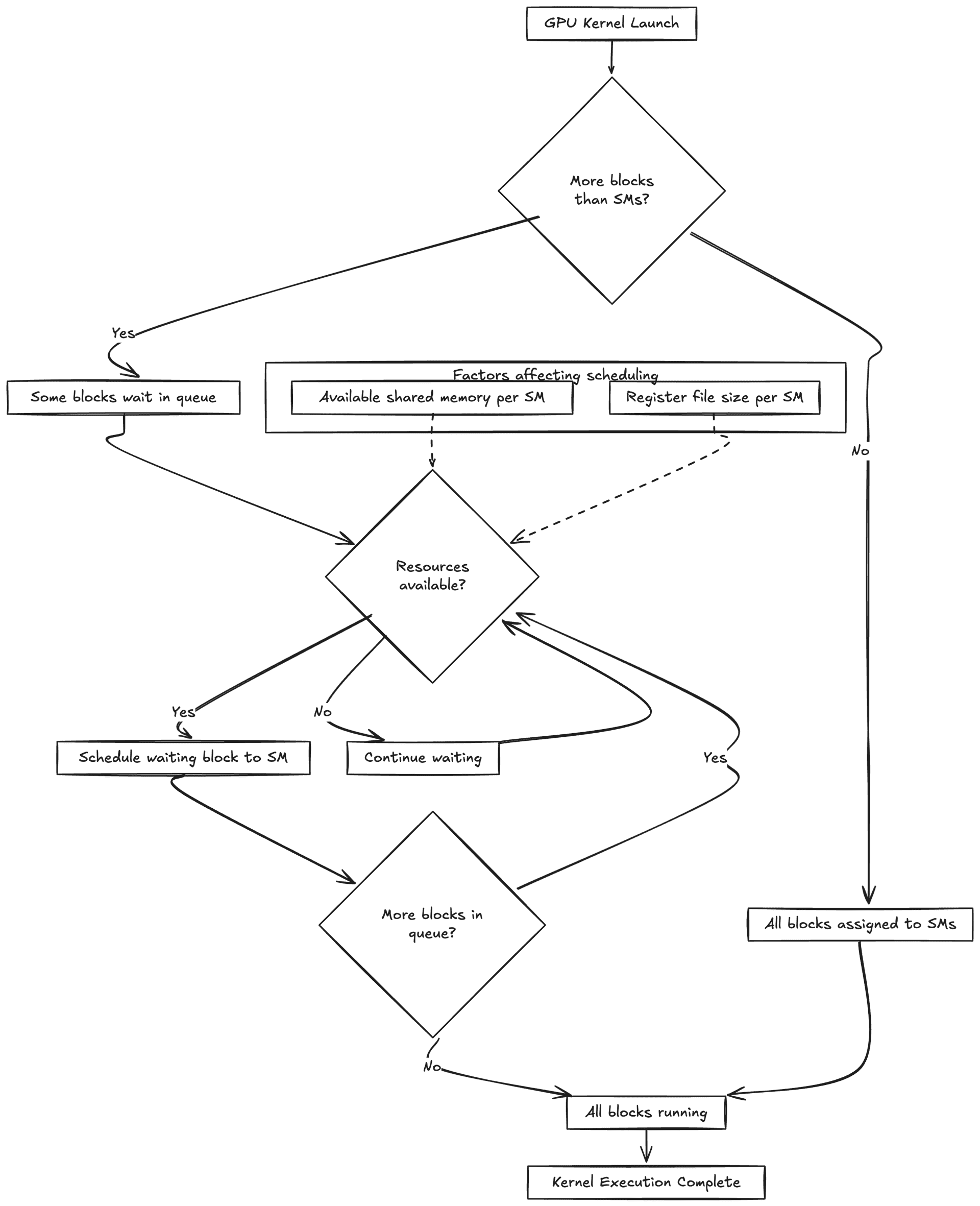

The GPU's scheduler manages blocks across available SMs. If there are more blocks than SMs, blocks wait in a queue and are scheduled as resources become available. This scheduling depends on factors like available shared memory and register file size per SM, which limit how many blocks can run concurrently.

Understanding these scheduling details is crucial when optimizing for runtime; choosing an appropriate block size can significantly impact performance by balancing parallelism and resource usage.

Quiz

What role does the thread block size play in CUDA kernel performance?

Thread Blocks and Grids

Now that we've covered how threads are organized into warps (32 threads each) and then grouped into blocks, and how these blocks are scheduled onto Streaming Multiprocessors (SMs), let us zoom out to show the complete picture.

A thread block is a group of threads that can cooperate via shared memory and synchronization and that execute on the same SM. One can think of a block as a team of threads working together on a portion of the data. The grid is composed of all the blocks launched by a kernel, representing all the teams tackling the entire problem. In the diagram below, you can see how the grid is divided into multiple blocks, each block is subdivided into warps, and each warp contains multiple threads.

One reason for blocks is purely practical: there's a hardware limit on how many threads one can launch in a single block (typically 1024 threads maximum in current GPUs). If the problem needs more threads than that, one must split them into multiple blocks. For example, suppose you want to add two arrays of 2048 elements. You can use 2 blocks of 1024 threads each, block 0 handles indices 0–1023 and block 1 handles indices 1024–2047. In general, if you have N elements and your block can have at most B threads, you'd launch ceil(N/B) blocks so that all elements get covered. In the earlier vector addition example, we launched 3907 blocks of 256 threads to handle 1,000,000 elements. The collection of all these blocks (3907 of them) is the grid.

Another reason for blocks is that they allow scaling and scheduling flexibility. The GPU has a certain number of SMs (say your GPU has 20 SMs). Each SM can run a few blocks at a time (depending on resource availability). If I launch 100 blocks and only 20 can run concurrently (one per SM), the GPU will start 20 blocks in parallel, and as soon as one finishes, it schedules the next block on that free SM. From my perspective as a programmer, all 100 blocks collectively compute the result (the end result is as if 100 × blockSize threads ran). But the GPU handles distributing those blocks onto its hardware resources. This means I don't have to worry if my problem launches more threads than the GPU can physically execute at once—the runtime will time-slice blocks as needed. Blocks also provide a natural way to distribute work across GPUs or to limit how much work runs in parallel (which can sometimes help with resource constraints like shared memory or registers).

Threads within a block have special capabilities that make them more powerful when working together. They can share a fast, on-chip memory space and can synchronize their execution using barriers. This enables efficient cooperation for tasks that require communication between threads. However, this cooperation is limited to threads within the same block—threads in different blocks must use slower global memory to communicate and cannot directly synchronize with each other. This design choice enables flexible scheduling of blocks across SMs and allows CUDA programs to scale across different GPU architectures with varying numbers of SMs.

When choosing the number of threads per block, it is typical to use powers of 2 (like 128, 256, or 512) to align with the warp size (32 threads) and hardware characteristics. This choice affects various performance aspects including resource utilization, memory access patterns, and the GPU's ability to hide memory latency through thread scheduling.

Here's a simple example that demonstrates how blocks and grids work together to process a large array:

__global__ void processLargeArray(float* input, float* output, int N) {

// Calculate global thread index from block and thread indices

int idx = blockIdx.x * blockDim.x + threadIdx.x;

// Make sure we don't access beyond array bounds

if (idx < N) {

// Each thread processes one element

// For this example, just multiply each element by 2

output[idx] = input[idx] * 2.0f;

}

}

// Host code to launch the kernel

void launchProcessing(float* d_input, float* d_output, int N) {

// Choose number of threads per block (power of 2, <= 1024)

const int threadsPerBlock = 256;

// Calculate number of blocks needed to process N elements

int numBlocks = (N + threadsPerBlock - 1) / threadsPerBlock;

// Launch kernel with calculated grid dimensions

processLargeArray<<<numBlocks, threadsPerBlock>>>(d_input, d_output, N);

}

Notice how the kernel uses blockIdx.x, blockDim.x, and threadIdx.x to calculate a unique global index for each thread. I check array bounds since the number of threads might be rounded up to the next block size, and the host code calculates the number of blocks needed using ceiling division. Each thread processes exactly one element—a clean one-to-one mapping between threads and data.

Quiz

Why can't we launch a single block with 10,000 threads to handle 10,000 tasks on the GPU?

Memory Management in CUDA

CUDA programming also lets one manage data transfer between the CPU (host) and GPU (device). The CPU and GPU have separate memory spaces: the computer's RAM for the CPU, and the GPU's on-board VRAM for the GPU. You can't directly access GPU memory from the CPU or vice versa—you have to explicitly copy data across the PCIe (or NVLink) bus connecting them. This was one of the biggest surprises when coming from PyTorch, where moving a tensor to the GPU is one line and usually that's all you think about.

In raw CUDA C/C++, a common pattern is: allocate memory on the GPU (cudaMalloc), copy data from host to device (cudaMemcpy), launch kernels to do computation, copy results back to host, and finally free GPU memory (cudaFree).

For example, to use vecAddKernel from before, here's what the code looks like:

int N = 1000000;

size_t bytes = N * sizeof(float);

// Allocate host memory and initialize

float *h_A = (float*)malloc(bytes);

float *h_B = (float*)malloc(bytes);

float *h_C = (float*)malloc(bytes);

// ... fill h_A and h_B with data ...

// Allocate device memory

float *d_A, *d_B, *d_C;

cudaMalloc(&d_A, bytes);

cudaMalloc(&d_B, bytes);

cudaMalloc(&d_C, bytes);

// Copy input arrays from host to device

cudaMemcpy(d_A, h_A, bytes, cudaMemcpyHostToDevice);

cudaMemcpy(d_B, h_B, bytes, cudaMemcpyHostToDevice);

// Launch kernel (using, say, 256 threads per block as before)

int threads = 256;

int blocks = (N + threads - 1) / threads;

vecAddKernel<<<blocks, threads>>>(d_A, d_B, d_C, N);

// Copy result array back to host

cudaMemcpy(h_C, d_C, bytes, cudaMemcpyDeviceToHost);

// Free device memory

cudaFree(d_A);

cudaFree(d_B);

cudaFree(d_C);

Quiz

What is the correct sequence of CUDA memory operations when working with GPU data?

Shared Memory and Synchronization

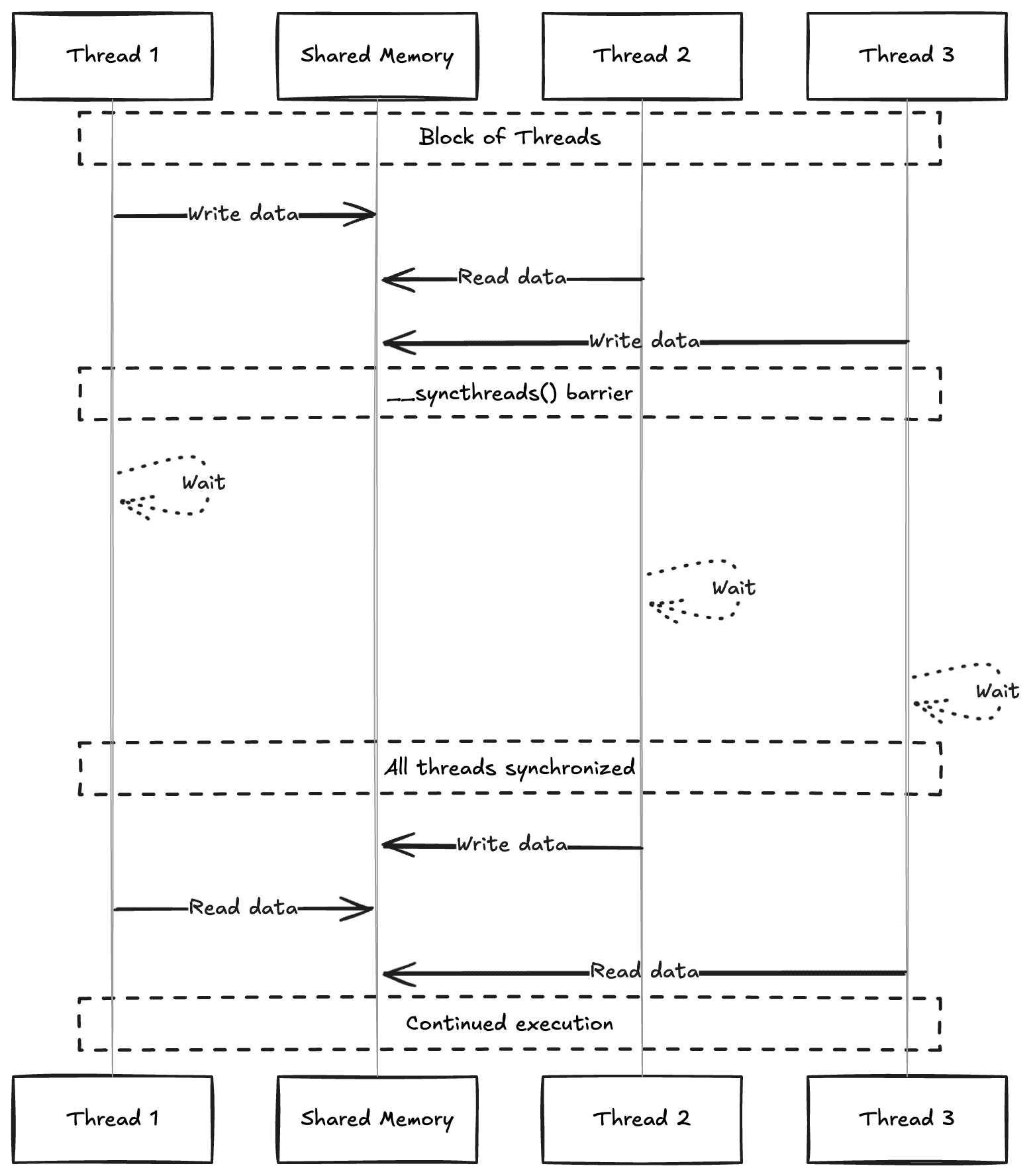

Unlike Python's managed memory, CUDA provides a fast, on-chip memory called shared memory, accessible by all threads in a block.

Synchronization is achieved with __syncthreads() (or cuda.syncthreads() in Numba), which acts as a barrier to ensure all threads in a block reach the same point before proceeding. This prevents race conditions, similar to using locks or barriers in Python multi-threading.

Here's an example:

__global__ void incrementElements(float *data, int n) {

__shared__ float tile[256]; // declare shared memory array

int idx = blockIdx.x * blockDim.x + threadIdx.x;

int tid = threadIdx.x;

if (idx < n) {

// Load element from global memory to shared memory

tile[tid] = data[idx];

__syncthreads(); // ensure all loads complete

// Each thread increments its element in shared memory

tile[tid] += 1.0f;

__syncthreads(); // ensure all threads finished updating

// Write the result back to global memory

data[idx] = tile[tid];

}

}

Quiz

What is the primary purpose of shared memory in CUDA programming?

Fused CUDA Kernels for LLMs

LLM workloads have driven the development of custom CUDA kernels that fuse multiple operations to reduce memory overhead and improve efficiency [1, 1]. One of the most well-known examples is FlashAttention [1], which optimizes Transformer self-attention by reducing memory reads and writes.

Traditional Transformer self-attention is computed as:

where , , and are the query, key, and value matrices, and is the dimension of the key vectors.

FlashAttention optimizes this by tiling the computation in GPU shared memory, reducing the number of times data is read from and written to global memory. This significantly improves efficiency, especially for long sequences.

Understanding how CUDA kernels work at a low level has helped me write custom fused kernels that outperform high-level PyTorch implementations.

FlashAttention: PyTorch vs CUDA Implementation

PyTorch Implementation

import torch, math

def flash_attention_pytorch(Q, K, V, block_size=16):

"""

Compute attention scores with memory-efficient block-wise operations.

Args:

Q: Query matrix [N_out x d]

K: Key matrix [N_inp x d]

V: Value matrix [N_inp x d]

block_size: Size of blocks for tiled computation

Returns:

O: Output matrix [N_out x d]

"""

N_out, d = Q.shape

N_inp = K.shape[0]

# Initialize output tensors

O = torch.zeros(N_out, d, device=Q.device)

L = torch.zeros(N_out, 1, device=Q.device)

# Calculate number of blocks needed

T_c = (N_inp + block_size - 1) // block_size # Ceiling division

T_r = (N_out + block_size - 1) // block_size

scale_factor = 1 / math.sqrt(d)

# Process Q and O in T_r blocks; K, V in T_c blocks

for i in range(T_r):

# Get current block of queries

q_start = i * block_size

q_end = min((i + 1) * block_size, N_out)

Q_block = Q[q_start:q_end]

# Initialize block accumulators

O_block = torch.zeros(q_end - q_start, d, device=Q.device)

L_block = torch.zeros(q_end - q_start, 1, device=Q.device)

m_block = torch.full((q_end - q_start, 1), float('-inf'), device=Q.device)

last_m = m_block.clone()

# Process K,V in blocks

for j in range(T_c):

k_start = j * block_size

k_end = min((j + 1) * block_size, N_inp)

K_block = K[k_start:k_end]

V_block = V[k_start:k_end]

# Compute attention scores for this block

S_block = scale_factor * (Q_block @ K_block.T) # [B_r x B_c]

# Update running maximum for numerical stability

m_block = torch.maximum(m_block, S_block.max(dim=-1, keepdim=True).values)

# Compute attention weights with numerical stability

P_block = torch.exp(S_block - m_block) # [B_r x B_c]

# Update accumulators with scaling factor from updated maximum

scaling_factor = torch.exp(last_m - m_block)

L_block = scaling_factor * L_block + P_block.sum(dim=-1, keepdim=True)

O_block = scaling_factor * O_block + P_block @ V_block

last_m = m_block.clone()

# Store results for this block

O[q_start:q_end] = O_block / L_block # Normalize with accumulated sum

L[q_start:q_end] = L_block

return O

The key ideas here: we process matrices in blocks to save memory, use the max-trick for numerical stability in softmax, and maintain a running maximum (last_m) to scale previous computations correctly. Results accumulate progressively so we can handle large sequences without blowing up memory.

CUDA Implementation

Here's the equivalent CUDA kernel that fuses these operations for maximum efficiency:

constexpr int BLOCK_SIZE = 16;

constexpr int HIDDEN_DIM = 128;

extern "C" __global__ void flash_attention_cuda(

float* output, // [N_out x d]

float* output_lse, // [N_out]

const float* query, // [N_out x d]

const float* key, // [N_inp x d]

const float* value, // [N_inp x d]

const float scale,

const int N_out,

const int N_inp) {

// Shared memory for block-wise computation

__shared__ float q_block[BLOCK_SIZE][HIDDEN_DIM];

__shared__ float k_block[BLOCK_SIZE][HIDDEN_DIM];

__shared__ float v_block[BLOCK_SIZE][HIDDEN_DIM];

// Thread indices

const int tx = threadIdx.x;

const int ty = threadIdx.y;

const int row = blockIdx.x * BLOCK_SIZE + tx;

// Local accumulators in registers

float m_i = -INFINITY;

float l_i = 0.0f;

float o_i[HIDDEN_DIM] = {0.0f};

// Process input in tiles

for (int tile = 0; tile < (N_inp + BLOCK_SIZE - 1) / BLOCK_SIZE; ++tile) {

// Load query block (done once per outer loop)

if (tile == 0 && row < N_out) {

for (int d = 0; d < HIDDEN_DIM; d += blockDim.y) {

int d_idx = d + ty;

if (d_idx < HIDDEN_DIM) {

q_block[tx][d_idx] = query[row * HIDDEN_DIM + d_idx];

}

}

}

__syncthreads();

// Load key and value blocks

if (tile * BLOCK_SIZE + ty < N_inp && row < N_out) {

for (int d = 0; d < HIDDEN_DIM; d += blockDim.y) {

int d_idx = d + ty;

if (d_idx < HIDDEN_DIM) {

k_block[tx][d_idx] = key[(tile * BLOCK_SIZE + tx) * HIDDEN_DIM + d_idx];

v_block[tx][d_idx] = value[(tile * BLOCK_SIZE + tx) * HIDDEN_DIM + d_idx];

}

}

}

__syncthreads();

// Compute attention scores and update accumulators

if (row < N_out) {

float m_prev = m_i;

// Compute scores and find max for stability

float max_score = -INFINITY;

float scores[BLOCK_SIZE];

#pragma unroll

for (int j = 0; j < BLOCK_SIZE && tile * BLOCK_SIZE + j < N_inp; ++j) {

float score = 0.0f;

#pragma unroll

for (int d = 0; d < HIDDEN_DIM; ++d) {

score += q_block[tx][d] * k_block[j][d];

}

scores[j] = scale * score;

max_score = max(max_score, scores[j]);

}

// Update running max and scale previous results

m_i = max(m_i, max_score);

float scale_factor = exp(m_prev - m_i);

l_i *= scale_factor;

#pragma unroll

for (int d = 0; d < HIDDEN_DIM; ++d) {

o_i[d] *= scale_factor;

}

// Compute attention and update output

#pragma unroll

for (int j = 0; j < BLOCK_SIZE && tile * BLOCK_SIZE + j < N_inp; ++j) {

float p_ij = exp(scores[j] - m_i);

l_i += p_ij;

#pragma unroll

for (int d = 0; d < HIDDEN_DIM; ++d) {

o_i[d] += p_ij * v_block[j][d];

}

}

}

__syncthreads();

}

// Write final output

if (row < N_out) {

float inv_l = 1.0f / l_i;

for (int d = 0; d < HIDDEN_DIM; ++d) {

output[row * HIDDEN_DIM + d] = o_i[d] * inv_l;

}

output_lse[row] = l_i;

}

}

Why is the CUDA version faster? It uses __shared__ memory instead of repeatedly slicing from global memory, stores accumulators in fast registers rather than tensor allocations, and processes rows in parallel across threads instead of sequentially. The structured memory loads enable coalescing, and #pragma unroll eliminates loop overhead that PyTorch's interpreter can't avoid.